AI Content Integrity: Solutions for Disinformation and Deepfake Detection in November 2025

Content integrity has become a top AI priority in November 2025, as generative models fuel a surge of synthetic media, deepfakes, and misinformation.

🔍 Why AI Content Integrity Is Trending Now

- Rapid advances in text-to-image and text-to-video models now produce realistic synthetic content that threatens brand trust, election integrity, and regulatory compliance.

- New EU AI Act compliance deadlines and stricter policy enforcement require transparent provenance and robust watermarking of AI-generated content.

- Platforms are rolling out real-time detection tools, blockchain verification, and AI-powered moderation for user-generated content.
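Blockchain-style verification rests on a simple idea: each record commits to a content hash and to the hash of the previous record, so altering any earlier entry breaks every link after it. The sketch below illustrates only that hash-chaining idea with an in-memory log built on Python's standard `hashlib`. It is not a real blockchain (no distribution, no consensus), and the `ProvenanceLog` class, its methods, and its record fields are hypothetical names chosen for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def sha256_hex(data: bytes) -> str:
    """Return the hex-encoded SHA-256 digest of raw bytes."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class ProvenanceLog:
    """Hypothetical append-only log; each entry links to the previous entry's hash."""
    entries: list = field(default_factory=list)

    def append(self, content: bytes, action: str, actor: str) -> dict:
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else GENESIS
        record = {
            "content_hash": sha256_hex(content),  # fingerprint of the asset itself
            "action": action,                     # e.g. "capture", "edit"
            "actor": actor,                       # who performed the action
            "prev_hash": prev_hash,               # link to the prior entry
        }
        # Hash a canonical JSON encoding so key order cannot change the digest.
        record["entry_hash"] = sha256_hex(json.dumps(record, sort_keys=True).encode())
        self.entries.append(record)
        return record

    def verify_chain(self) -> bool:
        """Recompute every entry hash and check each link to its predecessor."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if body["prev_hash"] != prev_hash:
                return False
            if sha256_hex(json.dumps(body, sort_keys=True).encode()) != entry["entry_hash"]:
                return False
            prev_hash = entry["entry_hash"]
        return True

    def matches_latest(self, content: bytes) -> bool:
        """Check whether some bytes are the most recently logged version of the asset."""
        return bool(self.entries) and self.entries[-1]["content_hash"] == sha256_hex(content)


if __name__ == "__main__":
    log = ProvenanceLog()
    log.append(b"raw video bytes", "capture", "camera-01")
    log.append(b"raw video bytes, color-graded", "edit", "editor@newsroom")
    print(log.verify_chain())                                  # True
    print(log.matches_latest(b"raw video bytes, color-graded"))  # True
    log.entries[0]["actor"] = "someone-else"  # tamper with history
    print(log.verify_chain())                                  # False
```

The tampering check works because the first entry's recomputed hash no longer matches its stored `entry_hash`; a distributed ledger adds replication so that no single party can quietly rewrite the whole chain.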

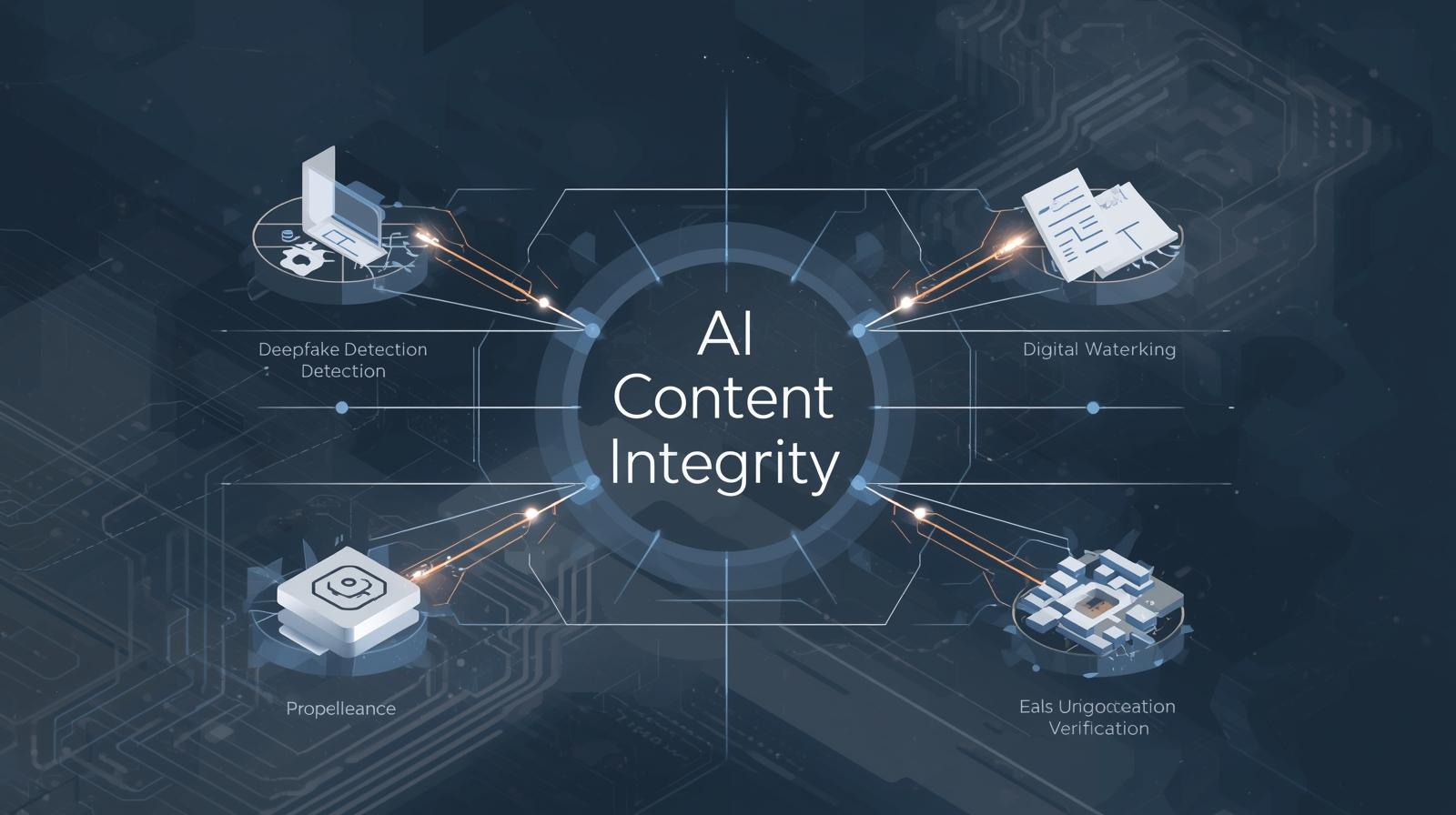

⚙️ Key Technologies and Solutions

- Deepfake detection: Tools that analyze voice, facial, and metadata cues to flag synthetic media.

- Watermarking & provenance: Visible and invisible signals track source, edits, and ownership.

- Automated moderation: AI models filter fake news, flagged assets, and policy violations.
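Invisible watermarking can be illustrated with the simplest possible scheme: hide a short bit string in the least significant bit (LSB) of pixel values, which changes each value by at most 1 and is imperceptible to a viewer. The sketch below assumes an image given as a plain list of 8-bit grayscale values, and `embed_watermark` / `extract_watermark` are hypothetical helper names. It is a teaching example only: LSB marks do not survive compression, resizing, or re-encoding, which is why production watermarking relies on more robust techniques.

```python
def text_to_bits(message: str) -> list[int]:
    """Encode a UTF-8 string as a list of bits, most significant bit first."""
    return [(byte >> shift) & 1 for byte in message.encode("utf-8") for shift in range(7, -1, -1)]


def bits_to_text(bits: list[int]) -> str:
    """Decode a list of bits (length a multiple of 8) back into a UTF-8 string."""
    data = bytearray()
    for i in range(0, len(bits), 8):
        byte = 0
        for bit in bits[i:i + 8]:
            byte = (byte << 1) | bit
        data.append(byte)
    return data.decode("utf-8")


def embed_watermark(pixels: list[int], message: str) -> list[int]:
    """Write each message bit into the least significant bit of one pixel value."""
    bits = text_to_bits(message)
    if len(bits) > len(pixels):
        raise ValueError("image too small for this watermark")
    marked = list(pixels)  # leave the original image untouched
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # clear the LSB, then set it to the message bit
    return marked


def extract_watermark(pixels: list[int], num_bytes: int) -> str:
    """Read back a watermark of `num_bytes` bytes from the pixel LSBs."""
    return bits_to_text([p & 1 for p in pixels[: num_bytes * 8]])


if __name__ == "__main__":
    pixels = [(i * 37) % 256 for i in range(256)]  # stand-in for a grayscale image
    tag = "src:cam-01"                              # hypothetical source identifier
    marked = embed_watermark(pixels, tag)
    print(extract_watermark(marked, len(tag.encode("utf-8"))))  # src:cam-01
    # Each pixel value changes by at most 1, so the mark is visually invisible.
    print(all(abs(a - b) <= 1 for a, b in zip(pixels, marked)))  # True
```

The extractor must know the payload length in advance; real schemes typically embed a header or use fixed-size identifiers, and spread the signal redundantly so it survives common edits.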

Caxtra

Company

Related Articles

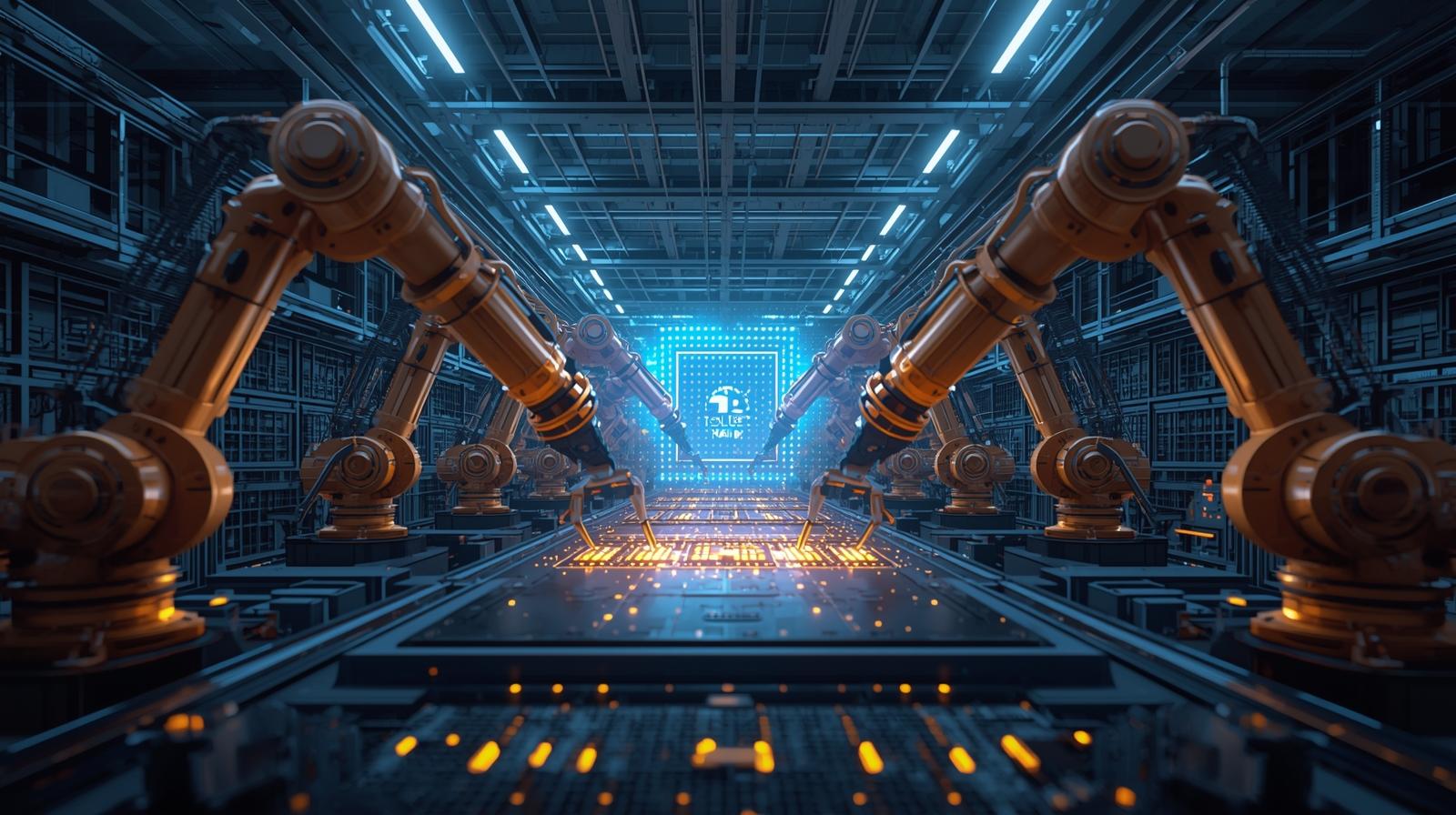

AI Chip Manufacturing Race Heats Up: TSMC, China & Global Supply Chain Battle in February 2026

TSMC dominates 75% of advanced AI chips while China triples production to challenge Nvidia dependency. The global AI chip manufacturing race defines 2026 compute capacity.

Physical AI & Robotics: The Hottest AI Trend Exploding in February 2026

Physical AI is transforming robotics from scripted automation to intelligent, adaptive systems that learn, navigate, and collaborate with humans in real environments.